Load balancing distributes incoming network traffic across multiple servers or resources so that capacity is used efficiently and no single server becomes overloaded. Several load balancing strategies exist, each suited to specific scenarios:

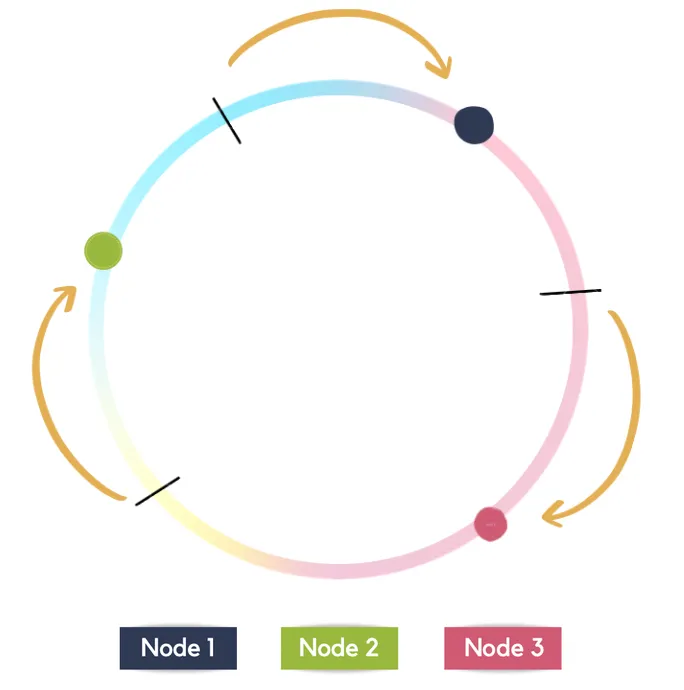

- Round Robin: Requests are distributed sequentially among servers in a circular order. It's simple and gives every server an equal share of requests, but it does not consider each server's current load or capacity, so uneven request costs or mismatched hardware can still overload some servers.

- Least Connections: Traffic is directed to the server with the fewest active connections. Because the active connection count serves as a rough proxy for current load, this strategy adapts well when connections vary in duration or are long-lived.

- Weighted Round Robin: Servers are assigned weights that reflect their relative capacity or processing power, and requests are distributed in proportion to those weights. For example, a server with weight 3 receives three requests for every one sent to a server with weight 1.

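- IP Hashing: A hash of the client's IP address determines which server receives the request. As long as the set of servers stays the same, requests from the same client are consistently sent to the same server, aiding session persistence. Adding or removing a server can remap many clients, and all clients behind a shared proxy or NAT land on the same server.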

- Least Response Time: Requests are directed to the server with the shortest recent response time; some implementations also factor in each server's active connections. This steers traffic toward whichever servers are currently responding fastest, reducing latency for end users.

- Resource-based Load Balancing: Takes into account server resource utilization metrics (CPU, memory, etc.) and directs traffic to servers with spare capacity, preventing any one server from becoming overloaded.

- Dynamic Load Balancing Algorithms: These algorithms adapt in real time to changing server conditions. They can factor in metrics such as server health, latency, and throughput to adjust traffic distribution continuously.

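- Content-based or Application-aware Load Balancing: Analyzes the content or context of requests, such as the URL path, HTTP headers, or cookies, to route traffic. For instance, it can direct video streaming requests to servers optimized for video processing. Because it must inspect request contents, this type of balancing operates at the application layer (Layer 7).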
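
As a minimal sketch of Round Robin in Python, `itertools.cycle` can hand out servers in a fixed circular order. The class name and the server names `app-1` to `app-3` are hypothetical, chosen only for illustration:

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in a fixed circular order, ignoring their load."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the server sequence forever.
        self._pool = cycle(servers)

    def next_server(self):
        return next(self._pool)


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([lb.next_server() for _ in range(5)])
# ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```

This sketch makes no attempt at thread safety and does not skip unhealthy servers; a real balancer would need both.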
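
Weighted Round Robin can be implemented in several ways. The sketch below follows the "smooth" weighted round-robin approach used by NGINX's upstream module, which interleaves a heavy server's turns instead of sending them in one burst. It omits NGINX's failure handling, and the class and server names are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class WeightedServer:
    name: str
    weight: int
    current: int = 0  # running credit used by the smooth WRR scheme


class SmoothWeightedRoundRobin:
    def __init__(self, weights):
        # weights: mapping of server name -> positive integer weight
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be a non-empty mapping of positive ints")
        self._servers = [WeightedServer(n, w) for n, w in weights.items()]
        self._total = sum(s.weight for s in self._servers)

    def next_server(self):
        # Every server earns credit equal to its weight...
        for s in self._servers:
            s.current += s.weight
        # ...the server with the most credit is chosen (earliest wins ties)...
        chosen = max(self._servers, key=lambda s: s.current)
        # ...and pays back the total weight, so the others catch up.
        chosen.current -= self._total
        return chosen.name


lb = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
print([lb.next_server() for _ in range(7)])
# ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```

Over every cycle of 7 picks, `a` is chosen 5 times, matching its weight, but never more than twice in a row.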
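
A minimal Least Connections sketch, assuming the caller reports when each connection closes. The `acquire`/`release` method names and the server names are hypothetical:

```python
class LeastConnectionsBalancer:
    def __init__(self, servers):
        # Active connection count per server; dicts keep insertion order.
        self._active = {server: 0 for server in servers}

    def acquire(self):
        # Pick the server with the fewest active connections (earliest wins ties).
        server = min(self._active, key=self._active.get)
        self._active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to `server` closes.
        if self._active[server] == 0:
            raise ValueError(f"{server} has no active connections")
        self._active[server] -= 1


lb = LeastConnectionsBalancer(["app-1", "app-2"])
first = lb.acquire()   # both idle, so 'app-1'
second = lb.acquire()  # 'app-2' now has fewer connections
lb.release(first)      # 'app-1' drops back to 0 active connections
print(lb.acquire())    # 'app-1'
```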
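
A minimal IP Hashing sketch: it hashes the packed address bytes with SHA-256 and takes the result modulo the number of servers. The choice of SHA-256 and the function name are assumptions. Python's built-in `hash()` is avoided because string hashing is randomized per process, which would break stickiness across balancer restarts or replicas:

```python
import hashlib
import ipaddress


def pick_by_ip_hash(client_ip, servers):
    # Parse first so equivalent spellings of an address hash identically.
    packed = ipaddress.ip_address(client_ip).packed
    # SHA-256 is stable across processes, unlike Python's salted hash() for str.
    digest = hashlib.sha256(packed).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
print(pick_by_ip_hash("203.0.113.7", servers))
print(pick_by_ip_hash("203.0.113.7", servers))  # same server as the line above
```

Because the index is taken modulo `len(servers)`, changing the pool size remaps most clients; consistent hashing is the usual remedy when that matters.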
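
One way to approximate Least Response Time is to keep an exponentially weighted moving average (EWMA) of each server's observed latency and pick the lowest. The EWMA, the smoothing factor `alpha=0.3`, and the names below are illustrative assumptions, not a specific product's algorithm:

```python
class LeastResponseTimeBalancer:
    def __init__(self, servers, alpha=0.3):
        # alpha: EWMA smoothing factor; higher values react faster to change.
        self._alpha = alpha
        self._ewma = {server: None for server in servers}  # None = not measured yet

    def pick(self):
        # Send traffic to unmeasured servers first so each gets sampled.
        for server, avg in self._ewma.items():
            if avg is None:
                return server
        return min(self._ewma, key=self._ewma.get)

    def record(self, server, seconds):
        # Fold a newly observed response time into the server's moving average.
        prev = self._ewma[server]
        if prev is None:
            self._ewma[server] = seconds
        else:
            self._ewma[server] = self._alpha * seconds + (1 - self._alpha) * prev


lb = LeastResponseTimeBalancer(["app-1", "app-2"])
lb.record("app-1", 0.120)
lb.record("app-2", 0.045)
print(lb.pick())  # 'app-2'
lb.record("app-2", 0.400)  # average rises to about 0.15 s
print(lb.pick())  # 'app-1'
```

A server that was slow once may stop receiving requests and so never get a chance to show it has recovered; a real implementation would need some form of periodic probing or decay to handle this.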
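
A resource-based sketch, assuming each server periodically reports CPU and memory utilization as fractions between 0 and 1. How those metrics are collected is outside this example, and the 0.85 threshold, the scoring rule, and all names are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class ServerMetrics:
    name: str
    cpu: float     # CPU utilization, 0.0 to 1.0
    memory: float  # memory utilization, 0.0 to 1.0


def pick_by_resources(metrics, max_utilization=0.85):
    # A server is only as free as its most heavily used resource.
    def busiest(m):
        return max(m.cpu, m.memory)

    # Skip servers that are already near saturation.
    eligible = [m for m in metrics if busiest(m) < max_utilization]
    if not eligible:
        raise RuntimeError("every server is above the utilization threshold")
    # Prefer the server with the most headroom on its busiest resource.
    return min(eligible, key=busiest).name


fleet = [
    ServerMetrics("app-1", cpu=0.90, memory=0.40),  # CPU-saturated, skipped
    ServerMetrics("app-2", cpu=0.55, memory=0.70),
    ServerMetrics("app-3", cpu=0.30, memory=0.60),
]
print(pick_by_resources(fleet))  # 'app-3'
```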
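
Dynamic algorithms recompute routing decisions from fresh measurements. The sketch below assumes health-check results and recent average latency are refreshed elsewhere. The scoring rule (weight 0 for unhealthy servers, otherwise inversely proportional to latency) is a hypothetical choice for illustration, and throughput or other metrics could be folded into the same score:

```python
import random
from dataclasses import dataclass


@dataclass
class ServerStatus:
    name: str
    healthy: bool       # result of the latest health check
    latency_ms: float   # recent average latency, refreshed periodically


def compute_weights(statuses):
    # Hypothetical scoring: unhealthy -> 0, otherwise 1 / latency.
    # Latency is floored at 1 ms so a near-zero reading cannot dominate.
    return {
        s.name: (1.0 / max(s.latency_ms, 1.0)) if s.healthy else 0.0
        for s in statuses
    }


def pick_dynamic(statuses, rng=random):
    # Weighted random choice among servers with a positive score.
    weights = compute_weights(statuses)
    names = [n for n, w in weights.items() if w > 0]
    if not names:
        raise RuntimeError("no healthy servers")
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


statuses = [
    ServerStatus("app-1", healthy=True, latency_ms=20.0),
    ServerStatus("app-2", healthy=False, latency_ms=5.0),
    ServerStatus("app-3", healthy=True, latency_ms=80.0),
]
# app-1 gets four times app-3's weight; app-2 gets 0 because it is unhealthy.
print(compute_weights(statuses))
print(pick_dynamic(statuses, random.Random(42)))
```

Re-running `compute_weights` whenever new measurements arrive is what makes the distribution adapt as conditions change.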
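
A minimal content-based routing sketch that selects a server pool by URL path prefix. The prefixes and pool names are hypothetical, and within the chosen pool any of the strategies above could pick the individual server:

```python
# Hypothetical routing table: first matching path prefix wins, so order matters.
ROUTES = [
    ("/video/", ["video-1", "video-2"]),
    ("/api/", ["api-1", "api-2", "api-3"]),
]
DEFAULT_POOL = ["web-1", "web-2"]


def choose_pool(path):
    # Route on the request path; headers or cookies could be checked the same way.
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL


print(choose_pool("/video/intro.mp4"))  # ['video-1', 'video-2']
print(choose_pool("/about"))            # ['web-1', 'web-2']
```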